Data Visualization

Chapter 1. Fundamentals of Graphical Practice

Iñaki Úcar

Department of Statistics | uc3m-Santander Big Data Institute

Master in Computational Social Science

Licensed under Creative Commons Attribution (CC BY 4.0). Last generated: 2023-09-27.

Graphical Perception: Theory, Experimentation and its Application to Data Display

Introduction

Cleveland, W. S. (1985). The Elements of Graphing Data. Wadsworth Inc.

When a graph is constructed, quantitative, categorical and ordinal data is encoded by symbols, geometry and color.

Graphical perception is the visual decoding of this encoded information.

A graph is a failure if the visual decoding fails.

- No matter how intelligent the choice of information.

- No matter how ingenious the encoding of information.

- No matter how technologically impressive the production.

Informed decisions about how to encode data must be based on knowledge of the visual decoding process.

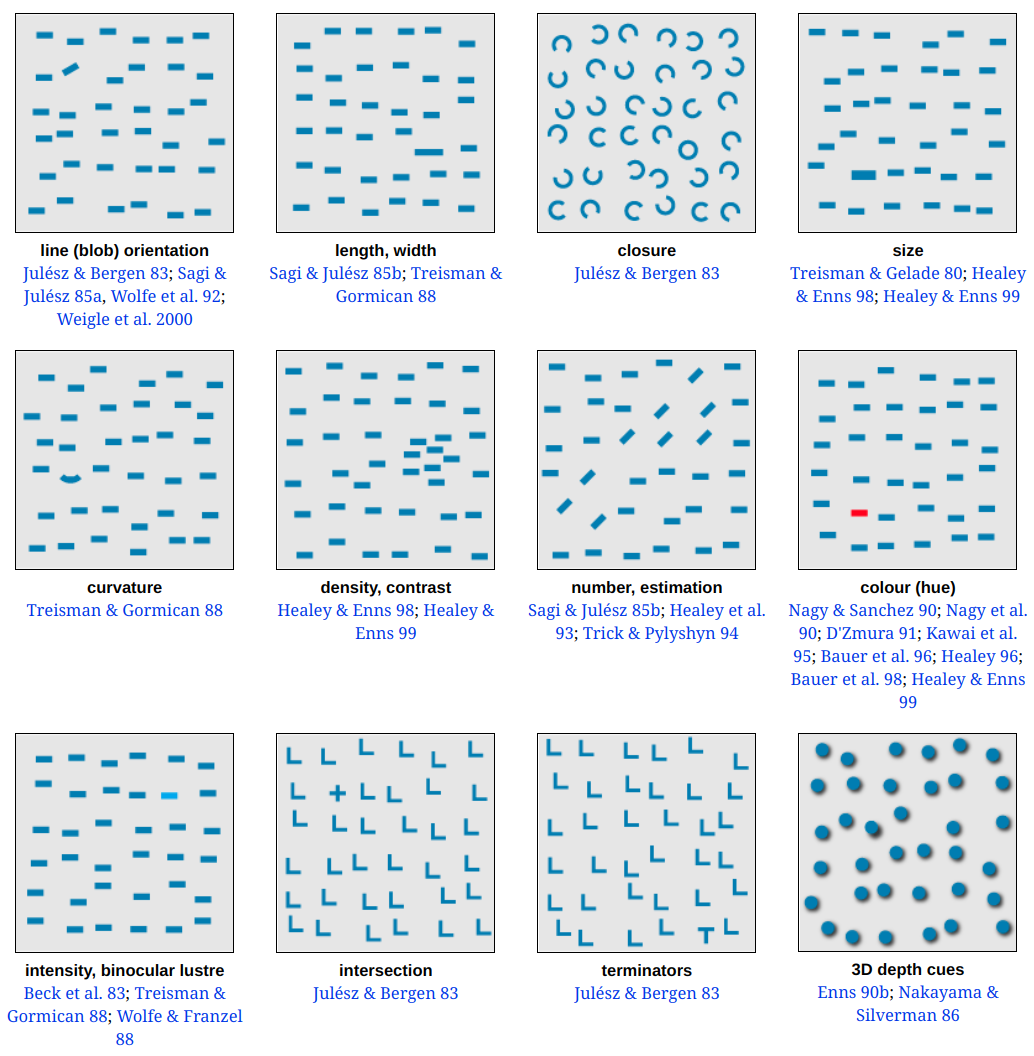

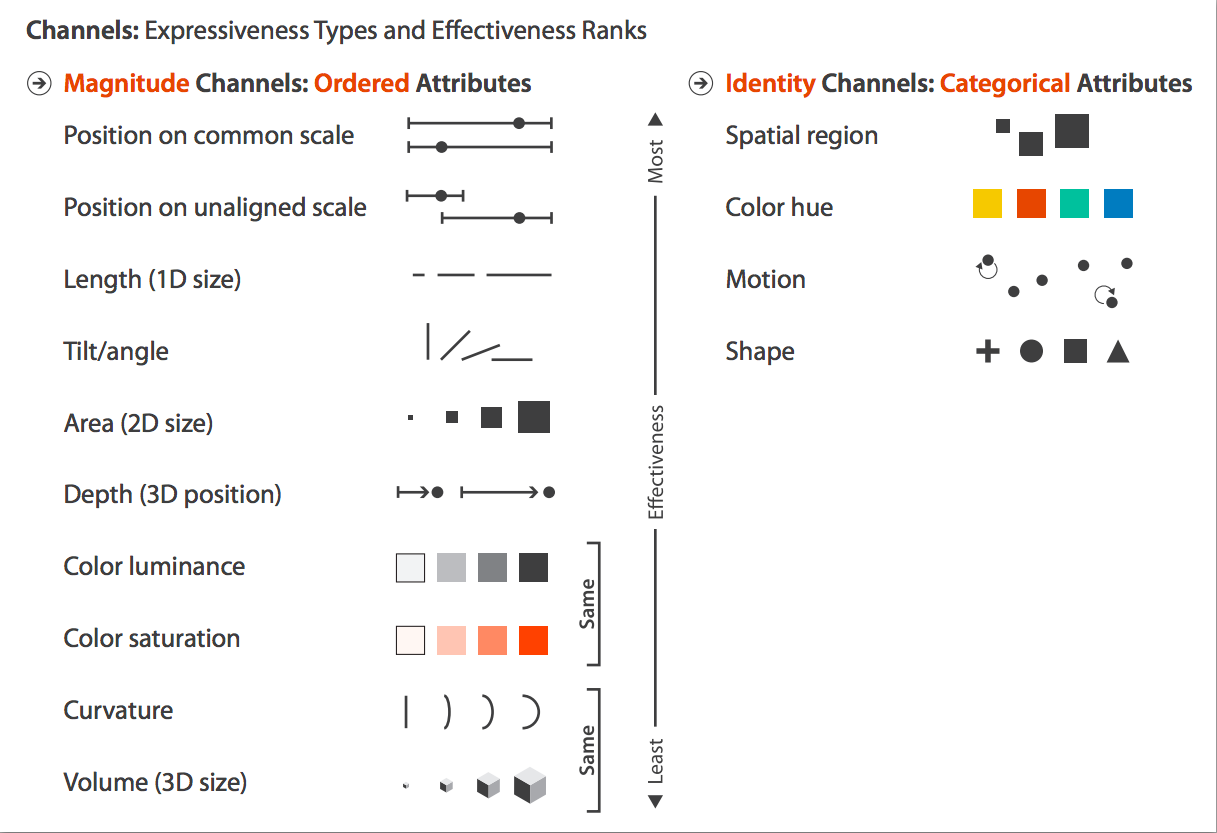

Paradigm

A specification of elementary graphical-perception tasks (channels) and an ordering of the tasks based on effectiveness.

- Related to preattentive vision

- As opposed to graphical-cognition tasks

A statement on the role of distance in graphical perception.

A statement on the role of detection in graphical perception.

The paradigm leads to principles of data display.

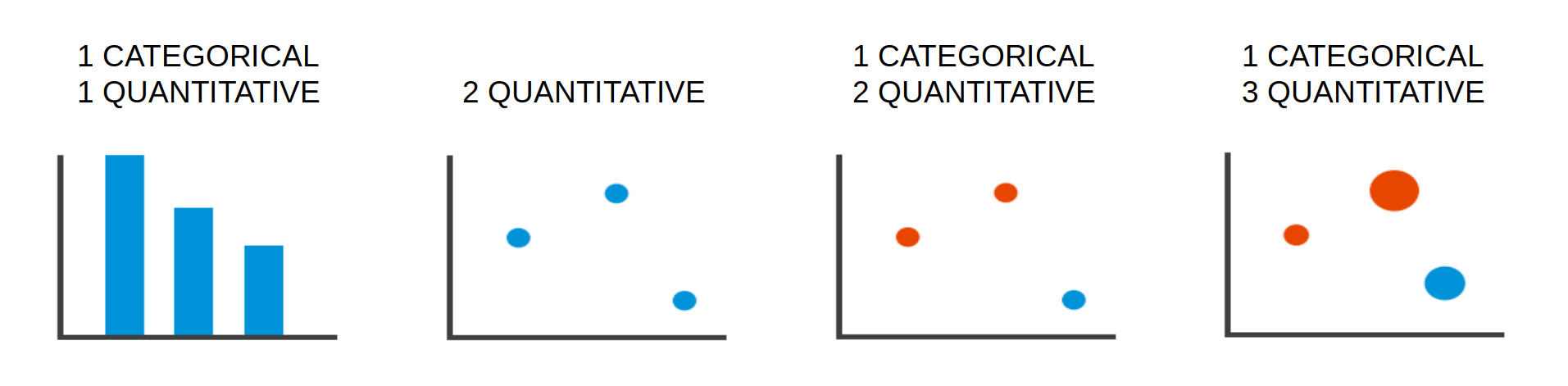

Elements of Visual Encoding

Visual encoding is the (principled) way in which data is mapped to visual structures:

- From data items to visual marks

- From data attributes to visual channels

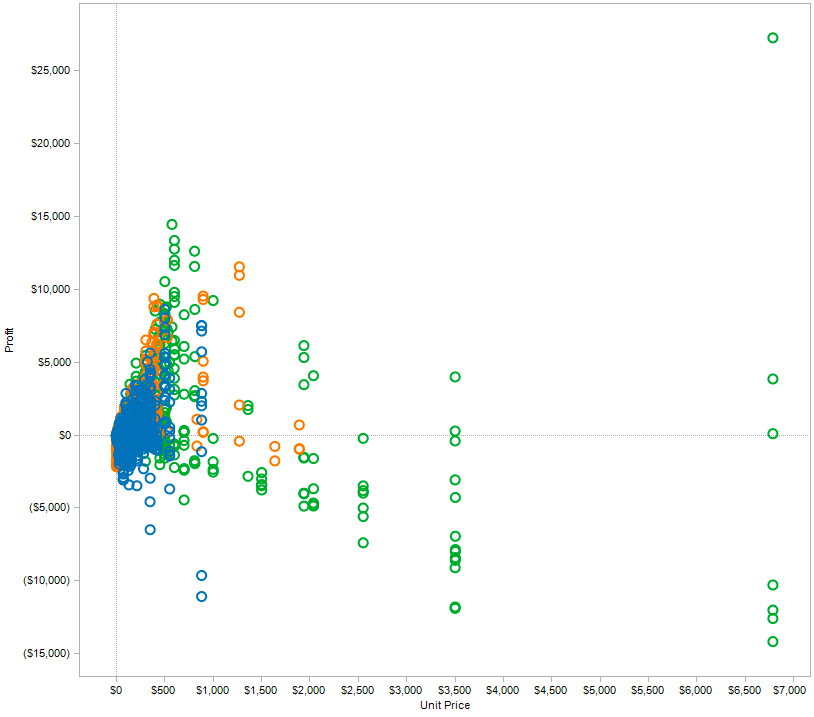

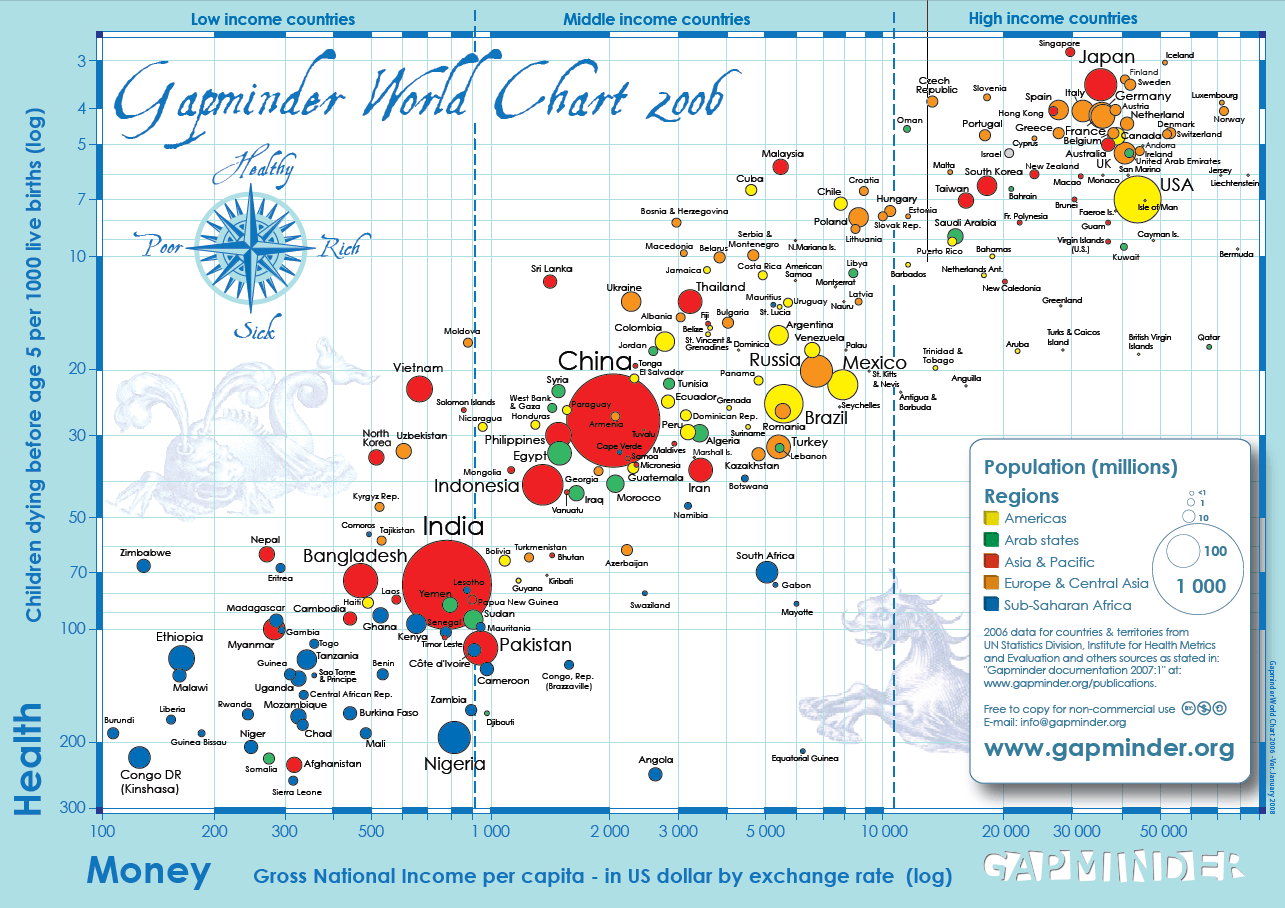

Elements of Visual Encoding

- Data items: sales

- Data attributes: price, profit, product type

- Visual marks: point

- Visual channels: xy position, color

- Encoding rules:

  - sale => point

  - price and profit => xy position

  - product type => color
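The encoding rules above can be written down as data. The following is a minimal Python sketch, not part of the original slides: the sales records, the `PointMark` class, the `encode` function and the two-color `PALETTE` are hypothetical, and positions are left in data units (a real plotting library would also scale them to pixels).

```python
from dataclasses import dataclass

# Hypothetical sales records: each row is one data item (a sale).
SALES = [
    {"price": 12.0, "profit": 3.5, "product_type": "coffee"},
    {"price": 8.0, "profit": 1.0, "product_type": "tea"},
    {"price": 15.0, "profit": 4.2, "product_type": "coffee"},
]

# Encoding rules from the slide: data attribute -> visual channel.
ENCODING = {"price": "x", "profit": "y", "product_type": "color"}

# Hypothetical categorical palette used by the color channel.
PALETTE = {"coffee": "#1b9e77", "tea": "#d95f02"}


@dataclass
class PointMark:
    """One visual mark (a point) plus the channel values that place and color it."""
    x: float
    y: float
    color: str


def encode(rows, encoding, palette):
    """Map each data item to one point mark, attribute by attribute."""
    marks = []
    for row in rows:
        channels = {channel: row[attr] for attr, channel in encoding.items()}
        # Categories must be turned into actual colors before drawing.
        channels["color"] = palette[channels["color"]]
        marks.append(PointMark(**channels))
    return marks


marks = encode(SALES, ENCODING, PALETTE)
print(marks[0])  # PointMark(x=12.0, y=3.5, color='#1b9e77')
```

Keeping the rules in a separate mapping makes the design decision explicit and easy to change, e.g. swapping which attribute drives color without touching the drawing logic.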

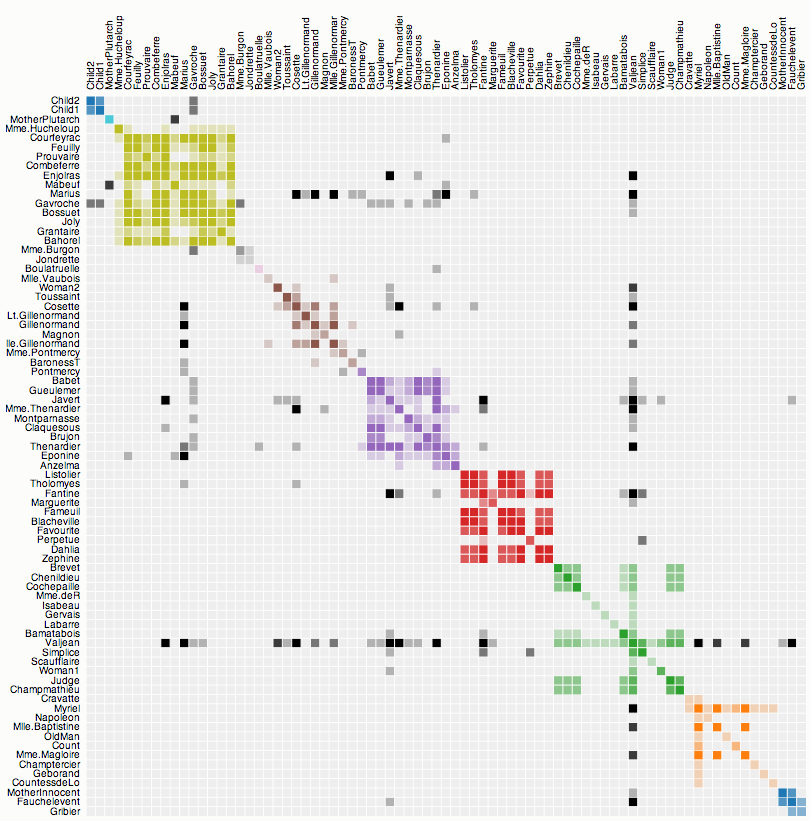

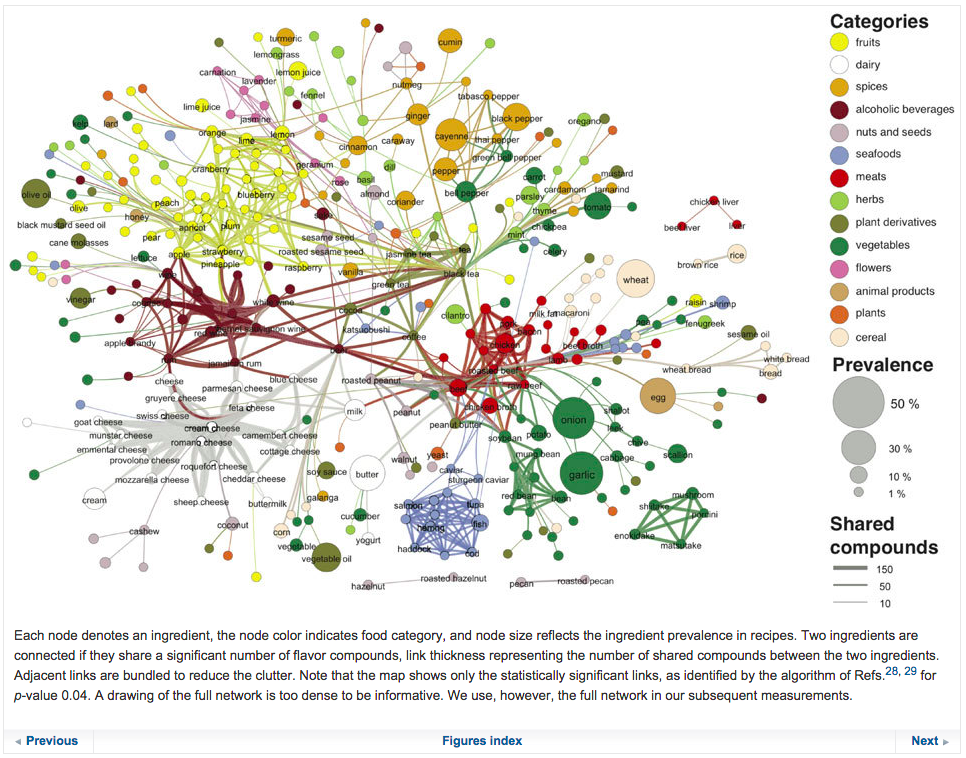

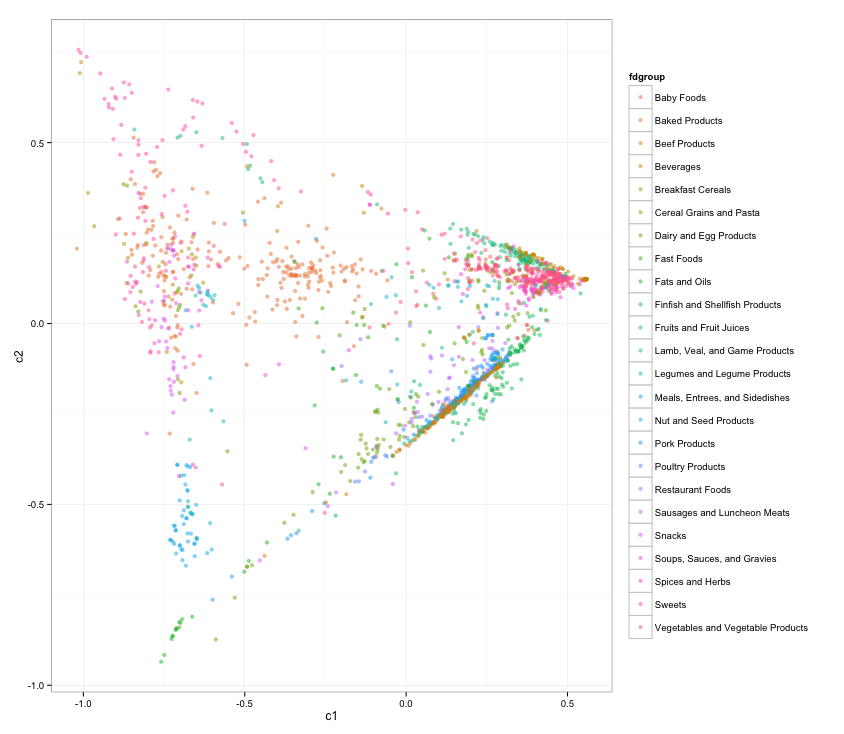

Elements of Visual Encoding

- Data items: co-occurrences

- Data attributes: name, cluster, frequency

- Visual marks: point

- Visual channels: xy position, hue, containment, intensity

- Encoding rules:

  - co-occurrences => point

  - name => xy position

  - cluster => hue, containment

  - frequency => intensity

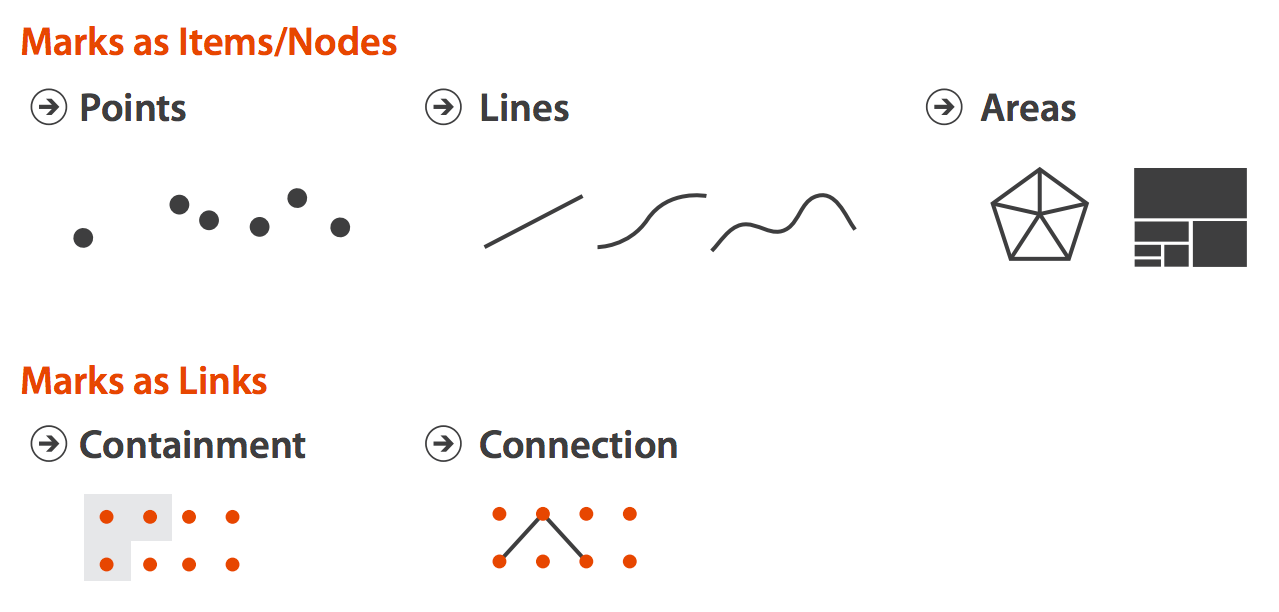

Elements of Visual Encoding

Visual marks are the basic graphical elements (e.g., points, lines, areas) that represent data items.

Elements of Visual Encoding

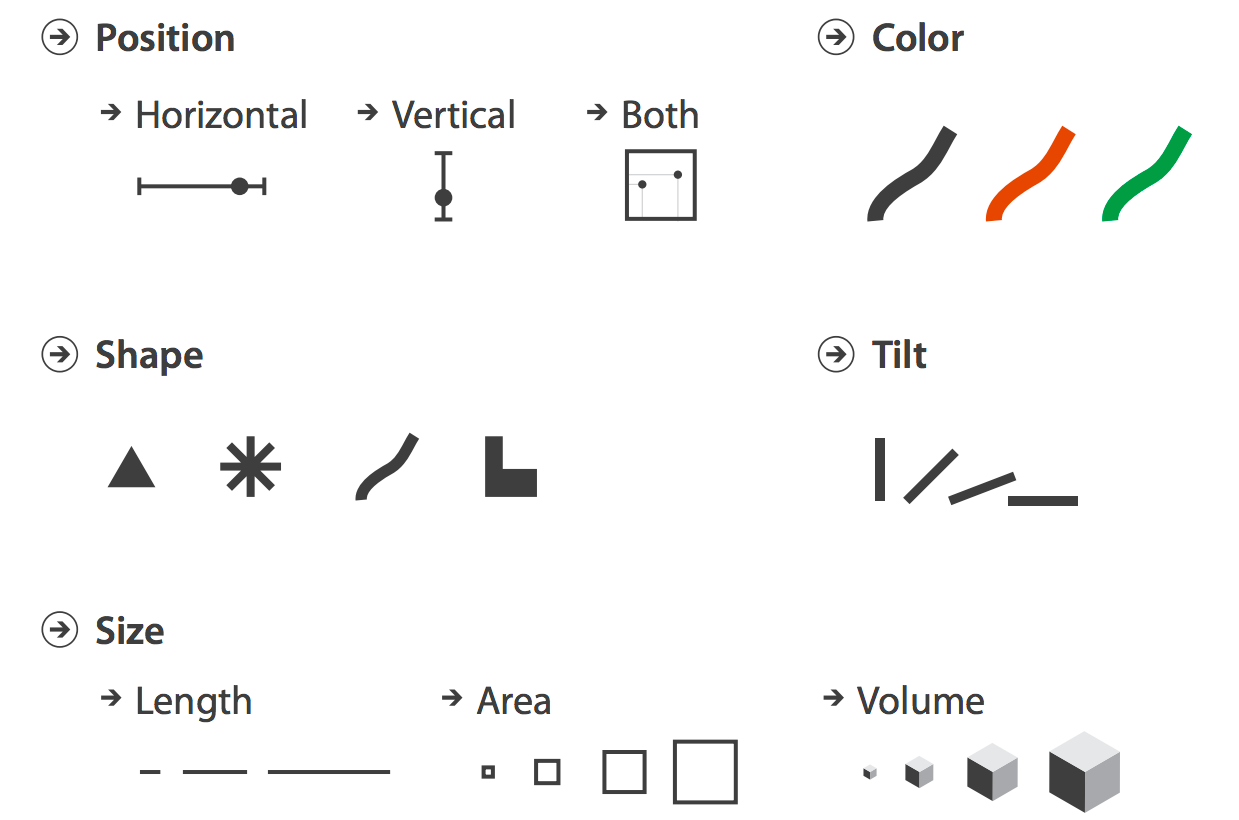

Visual channels are the visual variables (e.g., position, size, color) that control the appearance of marks and thus represent characteristics of data items.

Elements of Visual Encoding

Identity channels: information about what, who, where something is

- Example: color hue is suitable for categories

Magnitude channels: information about how much

- Example: position is suitable for quantities

Elements of Visual Encoding

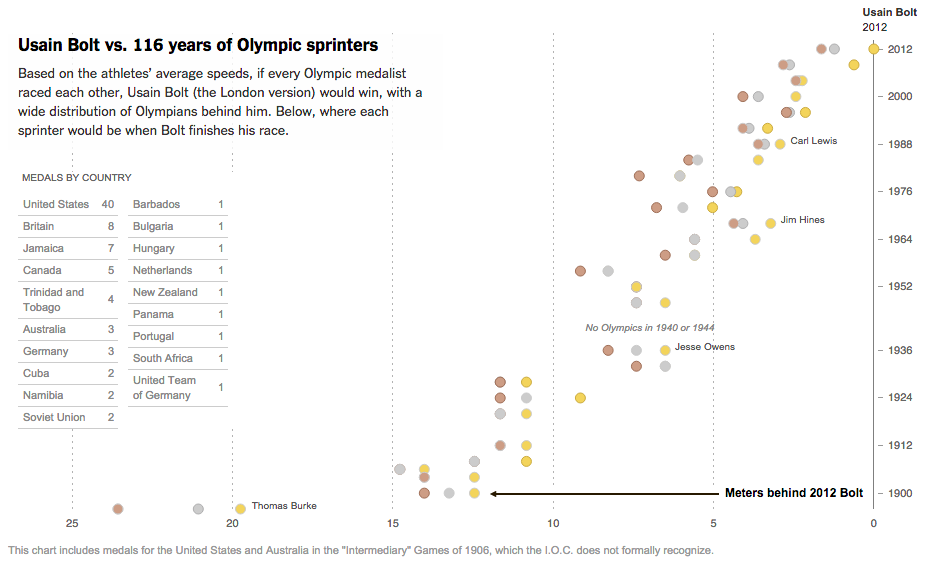

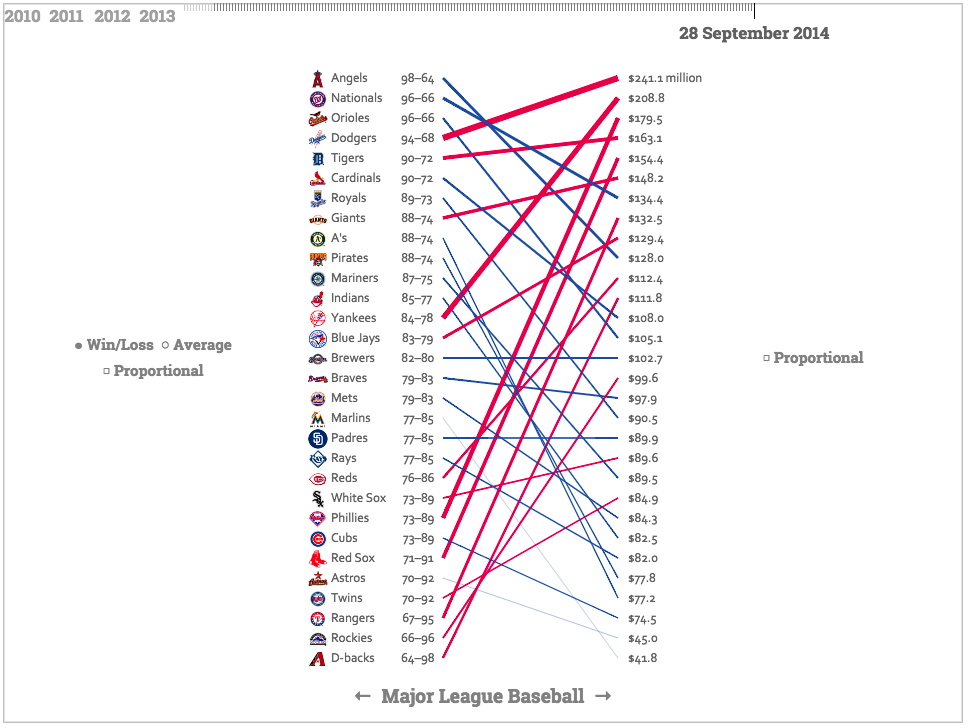

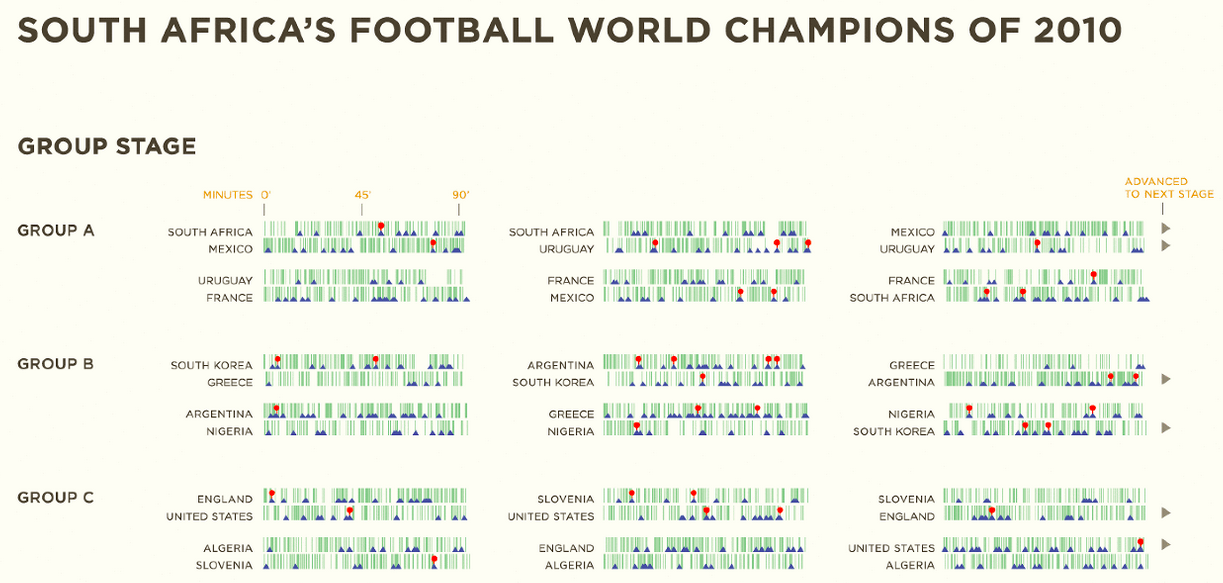

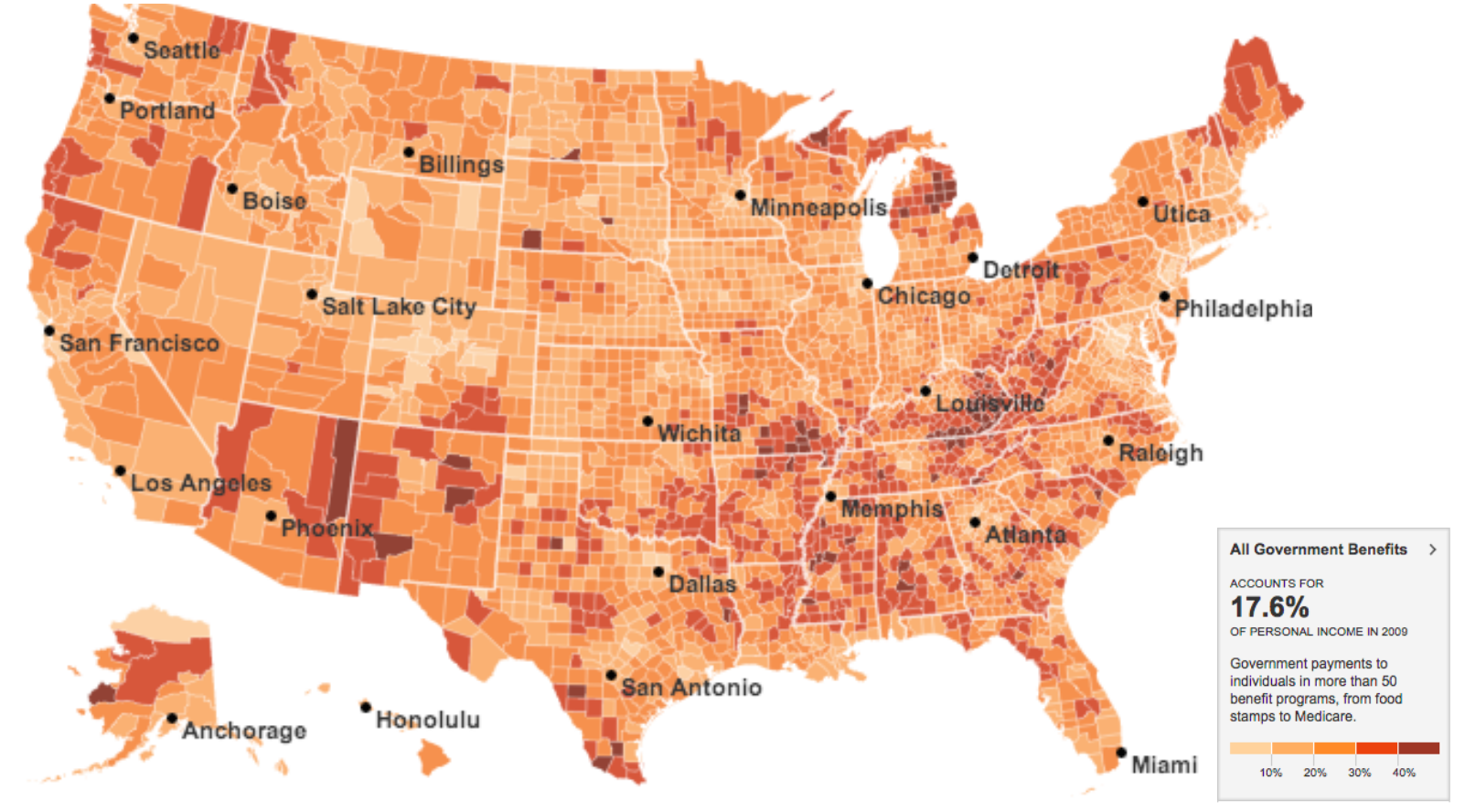

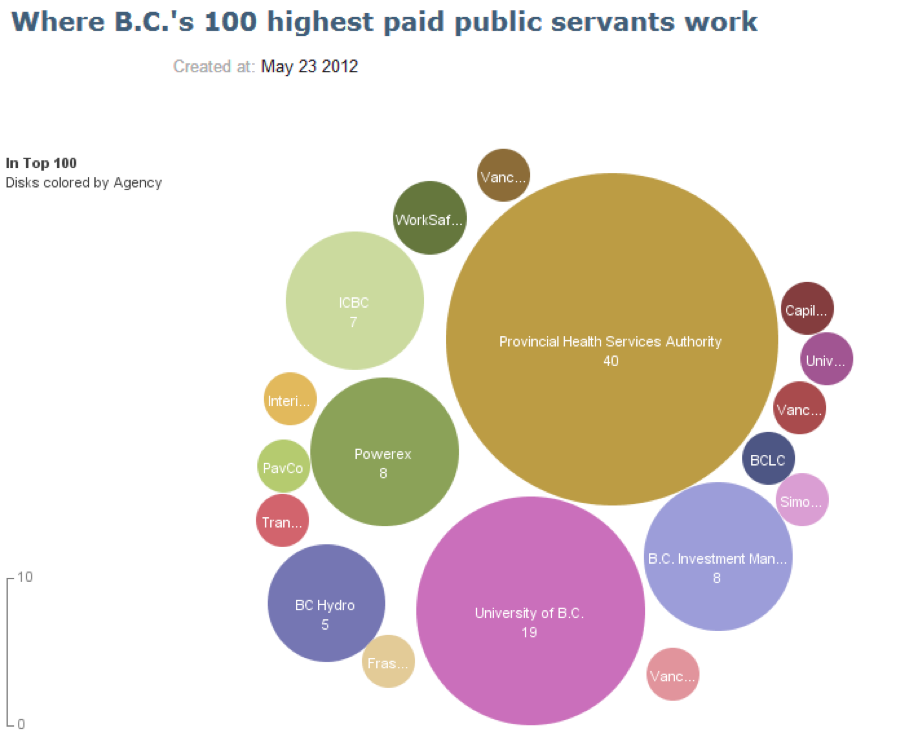

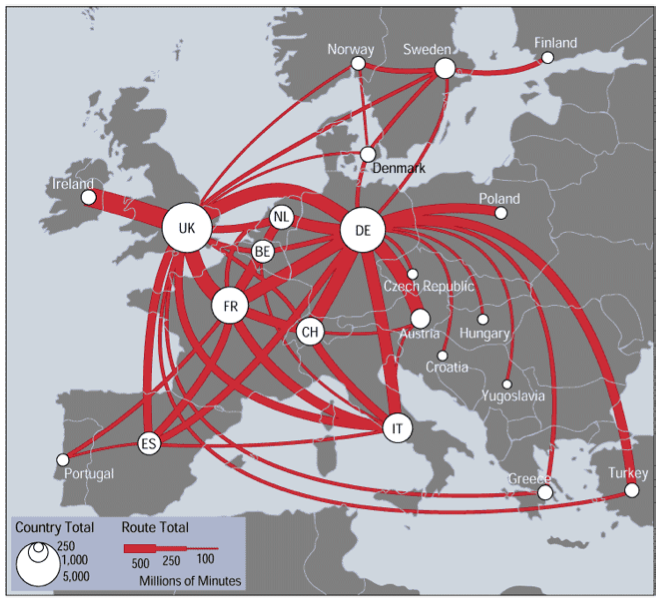

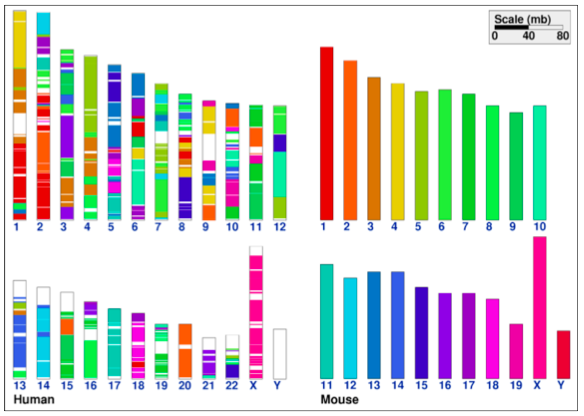

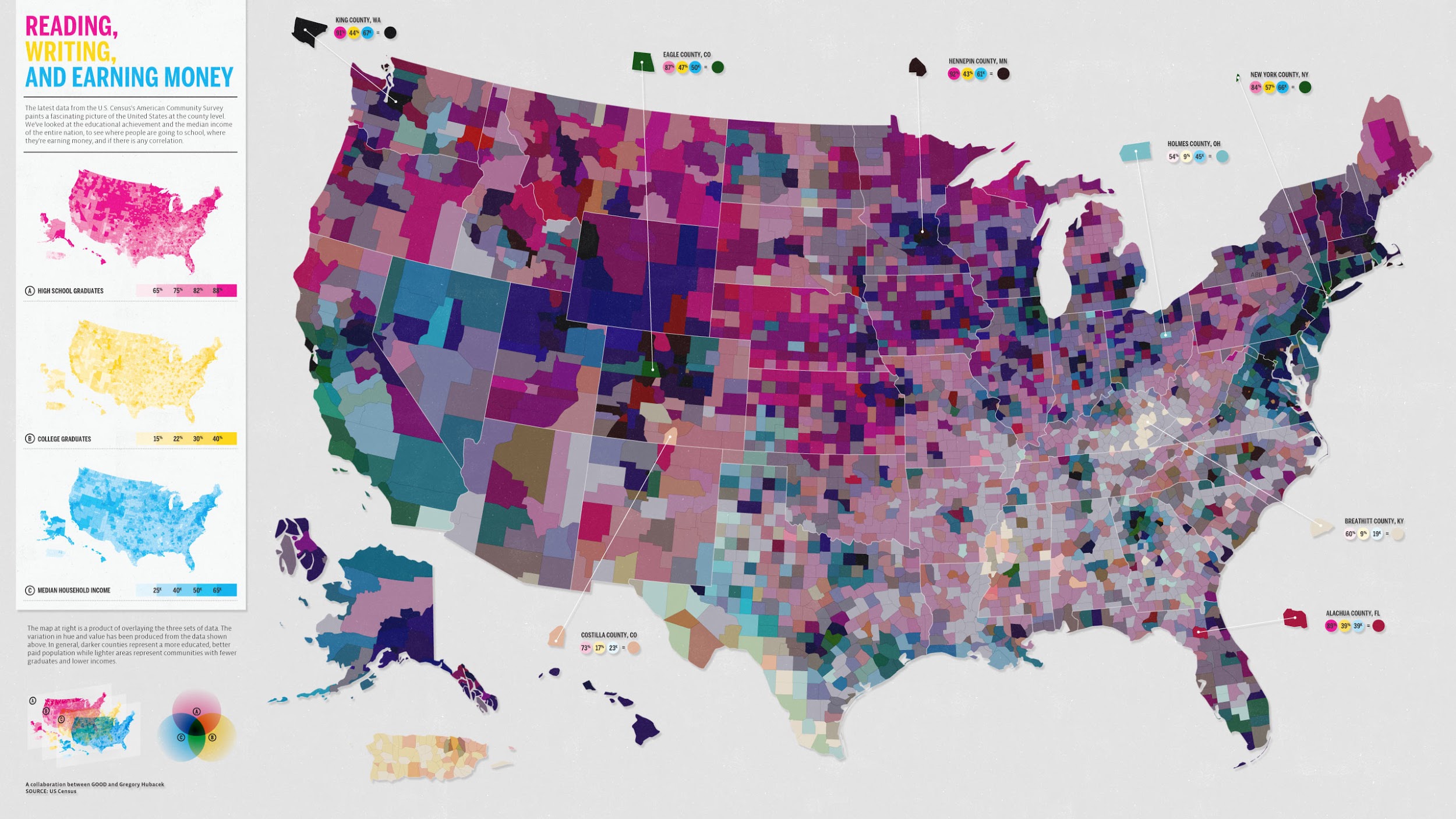

For each of the following, identify:

- Data item 1 => visual mark 1

- Data item 2 => visual mark 2

- ...

- Data attribute 1 => visual channel 1

- Data attribute 2 => visual channel 2

- ...

Source: New York Times

Source: Gapminder

Source: Fathom

Source: Cargo Collective

Source: New York Times

Source: Nature

Expressiveness Principle

Visual information should express all and only the information in the data

E.g., ordered data should not appear as unordered or vice versa
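The expressiveness principle can be checked mechanically once each attribute has a type (categorical, ordinal, quantitative) and each channel is classed as an identity or a magnitude channel. A minimal sketch, assuming the channel classification below (illustrative, not exhaustive) and hypothetical attribute names:

```python
# Assumed channel classification (illustrative, not exhaustive).
IDENTITY_CHANNELS = {"hue", "shape", "spatial_region"}
MAGNITUDE_CHANNELS = {"position", "length", "angle", "area", "luminance", "saturation"}


def expressiveness_violations(encoding, attr_types):
    """Return (attribute, channel, reason) for each mapping that misrepresents order."""
    problems = []
    for attr, channel in encoding.items():
        kind = attr_types[attr]
        if kind == "categorical" and channel in MAGNITUDE_CHANNELS:
            # Unordered data shown as ordered.
            problems.append((attr, channel, "implies an order the data does not have"))
        elif kind in ("ordinal", "quantitative") and channel in IDENTITY_CHANNELS:
            # Ordered data shown as unordered.
            problems.append((attr, channel, "hides an order the data has"))
    return problems


attr_types = {"price": "quantitative", "product_type": "categorical", "size_class": "ordinal"}
encoding = {"price": "position", "product_type": "luminance", "size_class": "hue"}
for problem in expressiveness_violations(encoding, attr_types):
    print(problem)
```

Here `price => position` passes, while `product_type => luminance` and `size_class => hue` are both flagged, one in each direction of the "vice versa" above.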

Example: What Does Position Encode?

Effectiveness Principle

The importance of the information should match the salience of the channel

Effectiveness Rank

Channel Effectiveness

Accuracy: How accurately values can be estimated

Discriminability: How many different values can be perceived

Separability: How independently a channel can be perceived when combined with others

Popout: How easily some values can be spotted among the rest

Grouping: How well a channel conveys groups

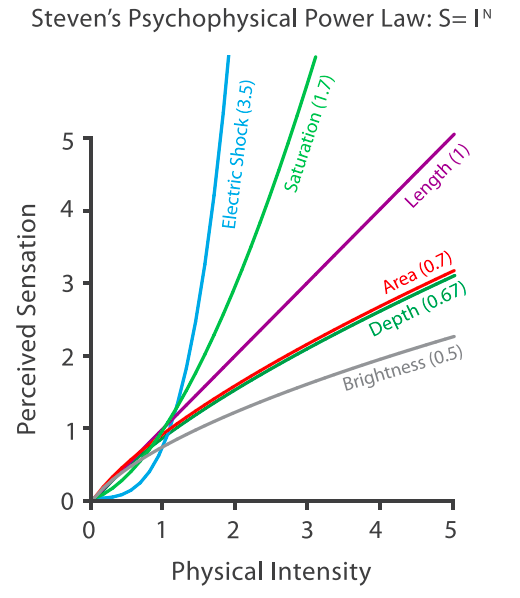

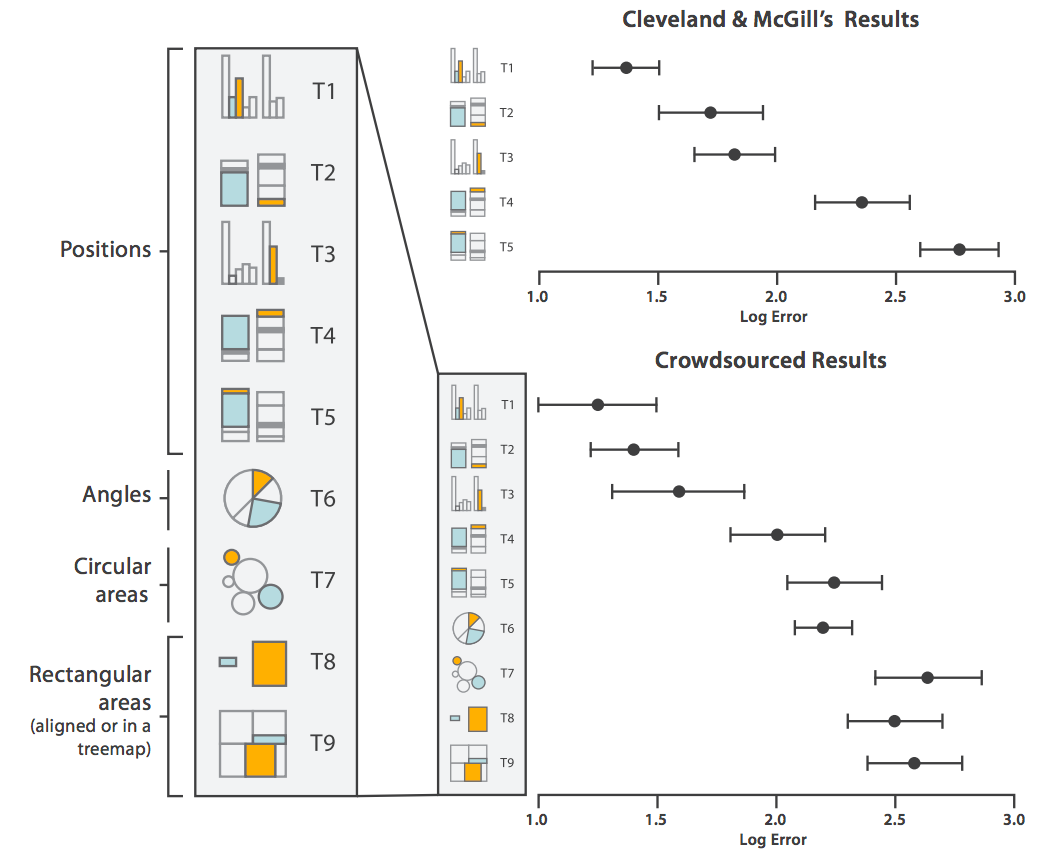

Accuracy

Implications for design: be mindful of the ranking of visual variables. Use the highest ranked channels for the most important information whenever possible.

Keep in mind: position and thus spatial layout is the king of visual channels. Think about how you use space first.
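The effectiveness principle can likewise be sketched as a check: whenever one attribute is more important than another, it should not receive a less accurate channel. The numeric ranks below are an assumed, simplified ordering in the spirit of Cleveland's ranking (ties allowed, e.g. both axes of a scatterplot); `mismatches` and the attribute names are hypothetical.

```python
# Assumed accuracy ranks for magnitude channels (lower = more accurate).
# Simplified, illustrative ordering; x and y share rank 0 because both
# axes of a scatterplot are positions on an aligned, common scale.
CHANNEL_RANK = {
    "x position": 0,
    "y position": 0,
    "length": 1,
    "angle": 2,
    "area": 3,
    "luminance": 4,
    "saturation": 5,
}


def mismatches(encoding, importance):
    """Return (more_important, less_important) attribute pairs where the more
    important attribute was given a strictly less accurate channel."""
    ranks = [CHANNEL_RANK[encoding[attr]] for attr in importance]
    pairs = []
    for i in range(len(importance)):
        for j in range(i + 1, len(importance)):
            if ranks[i] > ranks[j]:
                pairs.append((importance[i], importance[j]))
    return pairs


# Hypothetical attributes, listed from most to least important.
importance = ["profit", "price", "units_sold"]
good = {"profit": "y position", "price": "x position", "units_sold": "area"}
bad = {"profit": "area", "price": "x position", "units_sold": "y position"}
print(mismatches(good, importance))  # []
print(mismatches(bad, importance))   # [('profit', 'price'), ('profit', 'units_sold')]
```

The `bad` encoding hides the most important attribute in area while spending both spatial positions on secondary ones, which is exactly what "use space first" warns against.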

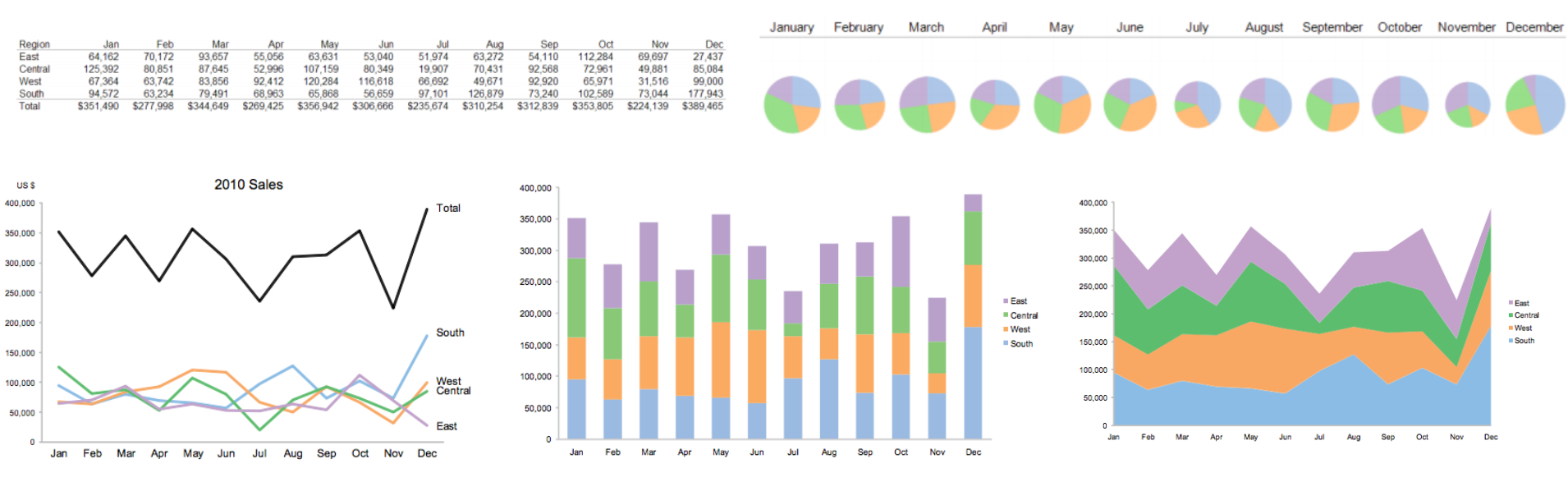

Accuracy

Source: Stephen Few

- Which one is more effective for comparing sales trends across regions? Why?

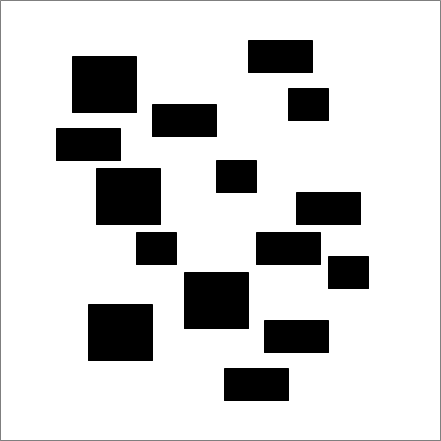

Discriminability

- How many values can we distinguish for any given channel?

- Rule: the number of available bins should match the number of bins we want to be able to see from the data

Implications for design: do not overestimate the number of available bins. For most visual channels, the number is surprisingly low.

When you have too many categories, find a way to group/bin the data further
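One common way to group categories further is to keep the most frequent ones and lump the rest into an "Other" bin. A minimal sketch; `lump_categories` and the `max_bins` value are hypothetical, and the right number of bins depends on the channel, which, as noted above, is usually small.

```python
from collections import Counter


def lump_categories(values, max_bins, other="Other"):
    """Keep the (max_bins - 1) most frequent categories and merge the rest
    into one `other` bin, so at most max_bins distinct values remain."""
    counts = Counter(values)
    if len(counts) <= max_bins:
        return list(values)  # already fits; nothing to merge
    keep = {cat for cat, _ in counts.most_common(max_bins - 1)}
    return [v if v in keep else other for v in values]


# Hypothetical categorical attribute with five levels, shown with only 3 colors.
product_types = ["coffee", "coffee", "coffee", "tea", "tea", "juice", "soda", "water"]
print(lump_categories(product_types, max_bins=3))
# ['coffee', 'coffee', 'coffee', 'tea', 'tea', 'Other', 'Other', 'Other']
```

Reserving one bin for "Other" keeps the frequent categories individually distinguishable instead of cycling through colors that cannot be told apart.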

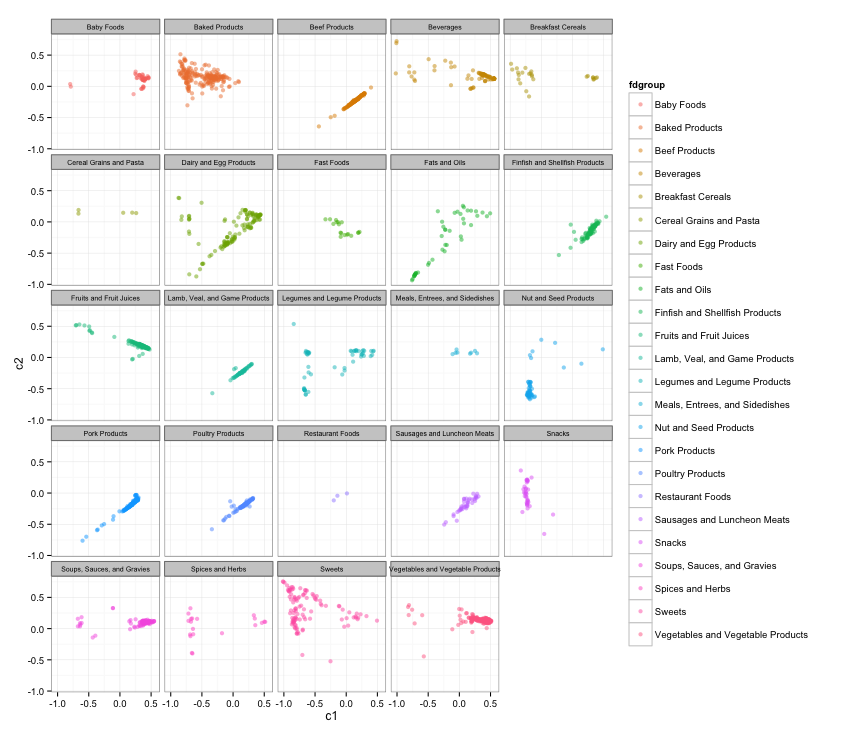

...or switch channels (e.g., the color vs. space trade-off).

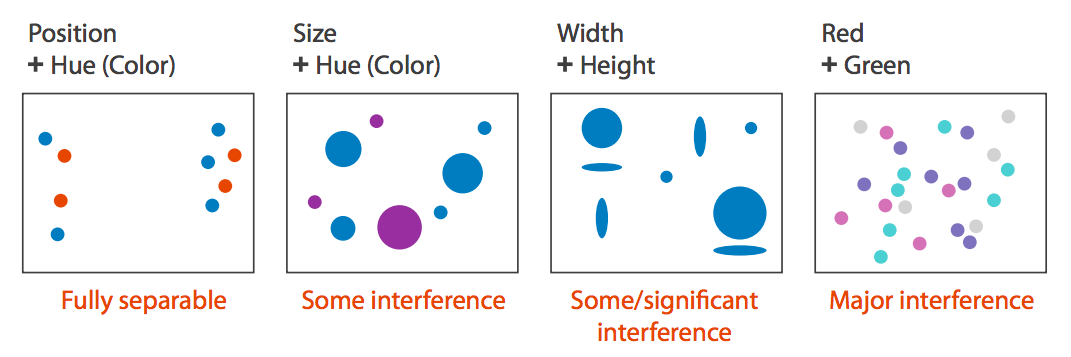

Separability

Channel pairs compared: width + height, shape + color, position + color

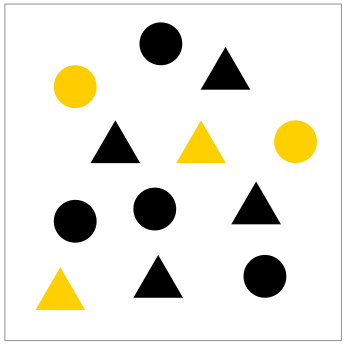

Separability, Popout

Implications for design: do not encode data with too many non-spatial visual channels.

Use separable dimensions.

To direct attention, use preattentive features.

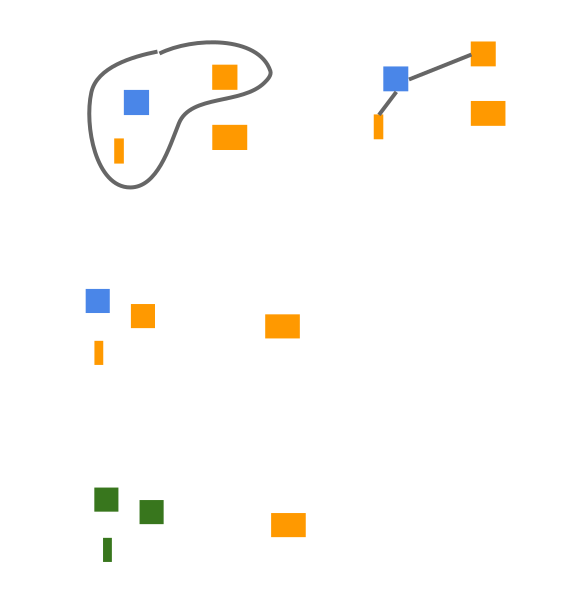

Grouping

- Containment and connection

- Proximity

- Similarity (identity)

Summary

Visual encoding is the (principled) way in which data (items and attributes) is mapped to visual structures (marks and channels).

Visual information should express all and only the information in the data, highlighting the important bits with effective channels.

Channel effectiveness depends on accuracy, discriminability, separability, popout, and grouping, and experiments have produced a ranking of channels to keep in mind for every visualization.